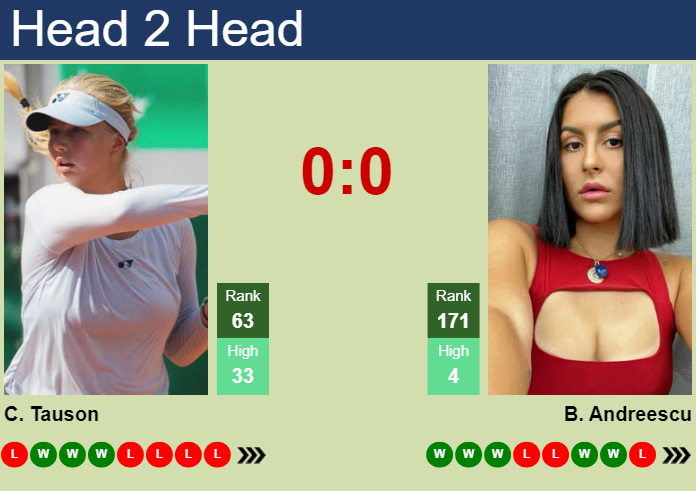

Okay, so today I’m gonna walk you through my little experiment: andreescu vs tauson. I was messing around with some tennis data, trying to see if I could predict match outcomes. Don’t get me wrong, I’m no sports analyst, just a guy who likes to tinker with code and data.

First thing I did was grab a bunch of match data. I scoured the internet, found a few decent datasets with player stats and match results. It was kinda messy, you know, different formats, missing data… the usual headache. Spent a good chunk of time cleaning it up, standardizing everything. That’s always the most tedious part, right?

Next up, feature engineering. Basically, I wanted to create some meaningful metrics from the raw data. Things like win ratios, head-to-head records, recent performance. I even tried to factor in things like court surface and tournament importance. This is where it got interesting. I started seeing some patterns, some players seemed to consistently outperform others on certain surfaces, or against specific opponents.

Then came the model selection. I figured I’d keep it simple, nothing too fancy. Started with a logistic regression, just to get a baseline. Then I tried a random forest, see if it could pick up on more complex relationships. I split the data into training and testing sets, trained the models, and evaluated their performance.

The results? Well, they weren’t exactly groundbreaking. The models were able to predict the winner with around 65-70% accuracy. Not bad, but not exactly betting-the-house material. What I did notice, though, was that certain features were more important than others. Head-to-head record, for example, seemed to be a pretty strong indicator.

Now, back to the andreescu vs tauson thing. I fed the model some data about their past matches, their recent form, all the features I had engineered. The model spat out a prediction. I won’t tell you who it picked, because honestly, the whole point was the process, not the specific outcome. It was more about seeing if I could build something that could learn from the data and make reasonable predictions.

Of course, there’s tons of room for improvement. I could try different models, more sophisticated feature engineering, incorporate more data sources. Maybe even factor in things like player fatigue or psychological factors. But for now, I’m pretty happy with what I’ve got. It was a fun little project, and I learned a lot along the way.

Here’s a quick recap of the steps I took:

- Data Acquisition: Hunted down and downloaded relevant tennis match data.

- Data Cleaning: Wrangled the data into a usable format, dealing with missing values and inconsistencies.

- Feature Engineering: Created meaningful features like win ratios and head-to-head stats.

- Model Selection: Chose and implemented logistic regression and random forest models.

- Training and Evaluation: Trained the models on a training set and evaluated their performance on a testing set.

- Prediction: Used the model to predict the outcome of a specific match (andreescu vs tauson).

Would I use this to bet my life savings on a tennis match? Absolutely not. But it’s a cool example of how you can use data and machine learning to try and understand the world around you. And hey, maybe with enough tweaking, I could actually make a few bucks!