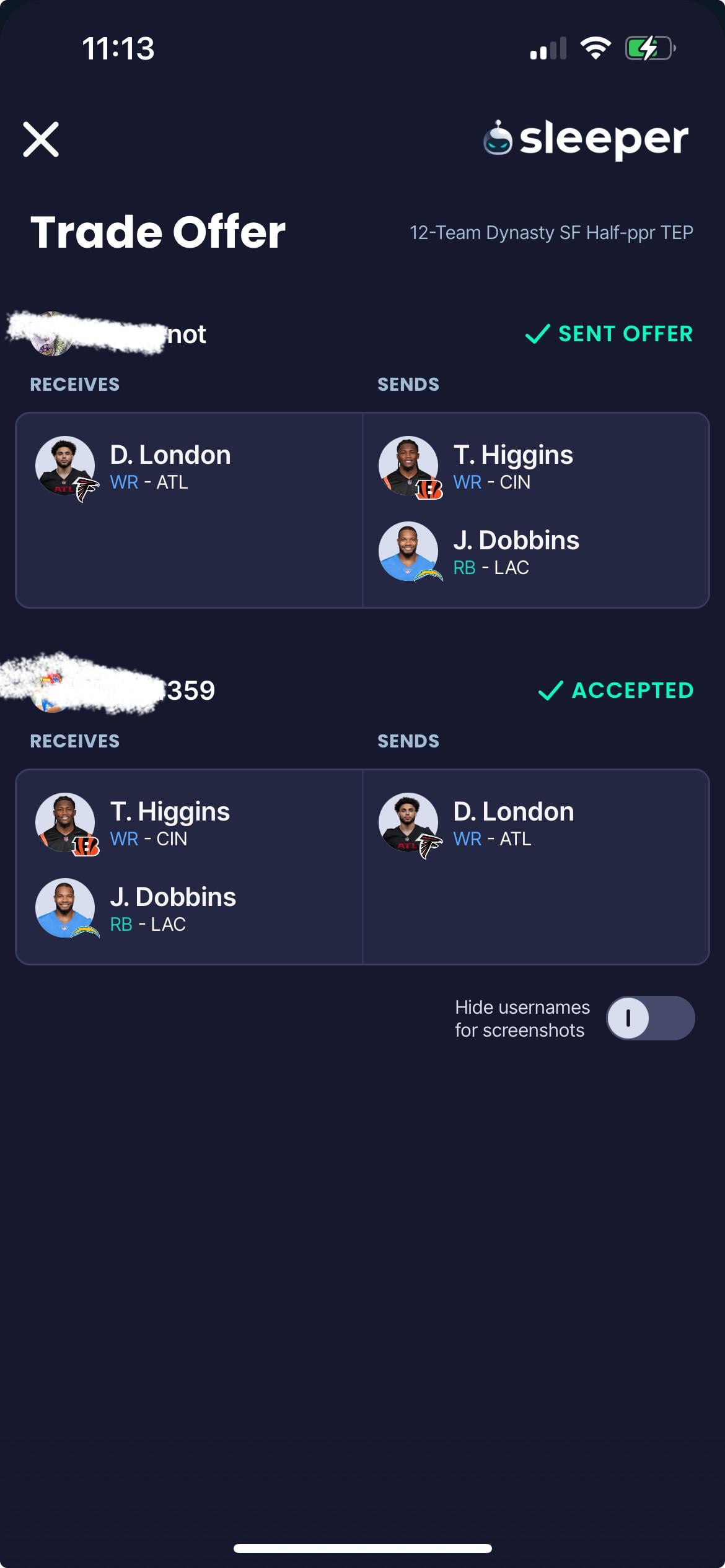

Okay, so I’ve been messing around with some AI models, and I wanted to compare these two – Higgins and London. I’ve heard some buzz about both, so I figured, why not put them to the test myself? Let’s dive into what I did.

Getting Started

First things first, I needed to get access to both models. That was a bit of a process, poking around on different platforms, signing up for stuff, figuring out the interfaces. Honestly, that part took longer than I expected!

The First Test – Simple Questions

Once I got everything set up, I started simple. I just asked both models some basic questions, like “What’s the capital of France?” and “How many legs does a dog have?”. You know, easy stuff. Both Higgins and London got these right, no problem. So far, so good.
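Here's a rough sketch of how a side-by-side check like this could be scripted instead of done by hand. Everything in it is an assumption for illustration: `make_stub`, `run_comparison`, and the canned answers are made-up placeholders, not real APIs for Higgins or London. You'd swap the stubs for real calls to whatever interface each model gives you.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical canned replies so the sketch runs without any model access.
CANNED_ANSWERS = {
    "What's the capital of France?": "The capital of France is Paris.",
    "How many legs does a dog have?": "A dog has four legs.",
}


def make_stub(answers: Dict[str, str]) -> Callable[[str], str]:
    """Return a fake 'model' that looks up a prepared reply for each prompt."""
    def ask(prompt: str) -> str:
        return answers.get(prompt, "")
    return ask


def run_comparison(
    models: Dict[str, Callable[[str], str]],
    questions: List[Tuple[str, str]],
) -> Dict[str, int]:
    """Ask every model every question and count replies containing the expected answer."""
    scores = {name: 0 for name in models}
    for prompt, expected in questions:
        for name, ask in models.items():
            # Case-insensitive substring match: crude, but fine for one-word facts.
            if expected.lower() in ask(prompt).lower():
                scores[name] += 1
    return scores


questions = [
    ("What's the capital of France?", "Paris"),
    ("How many legs does a dog have?", "four"),
]

# Placeholder models: replace each stub with a real call to the model in question.
models = {
    "Higgins": make_stub(CANNED_ANSWERS),
    "London": make_stub(CANNED_ANSWERS),
}

scores = run_comparison(models, questions)
print(scores)
```

The substring check is deliberately simple. It would miss a correct reply like "4 legs", so for anything beyond easy one-word facts you'd want to read the answers yourself.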

Cranking Up the Difficulty

Then I started throwing some curveballs. I asked more complex questions, stuff that required a bit more reasoning or understanding of context. For example, I tried asking about current events or for summaries of complicated topics. I wanted to see how well they could handle things that weren’t just straight facts.

Here’s where I started seeing some differences. One of the models, I noticed, sometimes got a bit confused or gave answers that were kind of…off. The other one seemed to be a little better at keeping things straight, giving more detailed and relevant responses.

Getting Creative

Next up, I tried some creative tasks. I asked both models to write a short story or a poem. I figured this would be a good way to see how well they could generate text that wasn’t just based on existing information.

- I gave them both the same prompt: “Write a short story about a cat who travels to the moon.”

- The results were…interesting. One of them produced a story that was kind of all over the place, with a plot that didn’t really make sense.

- The other one actually wrote something that was pretty decent! It had a clear beginning, middle, and end, and the cat even had a name.

Playing with Images

Some of these models also let you play with images, so I tried that out too. I asked them to generate images based on descriptions, like “a blue dog wearing a hat” or “a futuristic city on Mars.”

This was pretty fun! Both models could do this, but the quality of the images varied. One of them was consistently producing images that were more detailed and closer to what I had in mind.

The Overall Experience

I spent a good few hours messing around with these models, trying different things, and seeing what they could do. My initial impression is that they’re both powerful, but which one is better depends on what you want it to do.

One of the models is much better at the creative tasks, while the other is more reliable for straightforward information.

So, which one do I prefer?

Honestly, it depends. I think one model is much better for creative prompts, and the other is better for questions with definite answers.